From Principles to Practice

I don’t just teach AI ethics. I build the architecture that ensures your technology serves life.

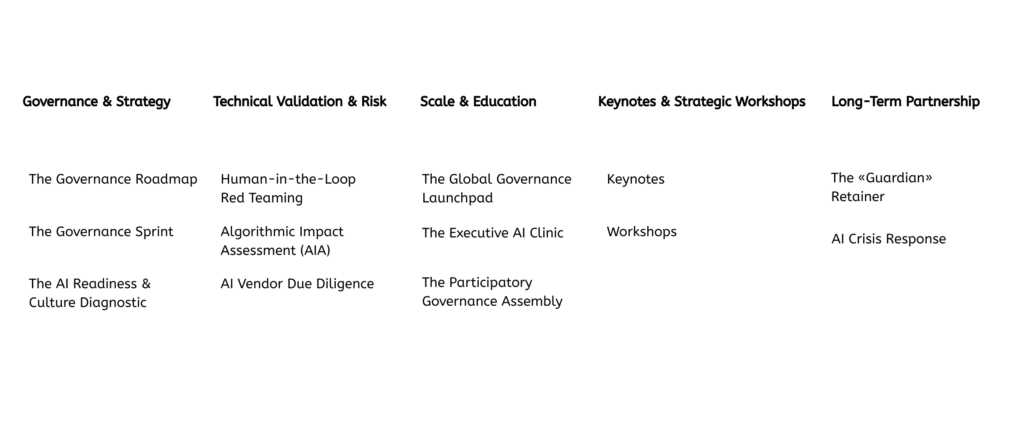

Whether you need a complete governance transformation, a technical audit before launch, or to equip your entire network—I have a structured path to get you there.

Governance & Strategy

Helping organizations move from high-level principles to operational safety. These engagements create the cultural and structural foundation for responsible AI use.

The Governance Roadmap

The Full Transformation

Go from anxiety to alignment. In 4-6 months, build a governance system your whole organization believes in

The Problem:

You know AI governance matters. But every time you try to write a policy, it either gathers dust or creates more confusion. Your teams are anxious. Your leadership is uncertain. And the technology keeps changing.

The Promise:

A complete cultural and technical transformation. We don’t just plan governance—we build it and embed it into your daily workflows. By the end, your organization will be fully «governance competent.»

The Process:

Using my Look, Create, Build methodology:

Phase 1: LOOK (Opening Space to See What Is)

- Deep listening interviews across your organization

- Surfacing tensions, desires, and hidden risks

- Mapping your current AI inventory and «Shadow AI»

- Creating common ground for what comes next

Phase 2: CREATE (The Messy Space of Collaborative Ideas)

- Co-creating bespoke Responsible AI principles—from the bottom up

- Defining «The Tree»—who holds the governance conversation

- Establishing your «Traffic Light» system (Red/Yellow/Green)

- Drafting your living AI Policy

Phase 3: BUILD (Bringing It to Life)

- Conducting risk assessments on 3-5 priority tools

- Training staff across departments

- Embedding governance controls into daily workflows

- Handover and transition to internal ownership

Who It’s For:

- Large NGOs and Foundations

- Climate Tech organizations

- Mission-driven enterprises

- Banks and financial institutions with social mandates

The Outcome:

A living system—not a document. Your organization has:

- ✓ Board-approved AI Policy

- ✓ Operational Risk Matrix

- ✓ Trained internal «Guardians»

- ✓ Clear decision-making protocols

- ✓ Ongoing governance rhythms

Testimonial:

«Ayşegül didn’t just draft our organisational AI policy—she helped us really define our ethical stance and synthesised all the complex and diverse perspectives on the team. She was a real ‘strategy partner’, helping us translate complex AI risks into a super clear and actionable Policy and Manifesto!»

— Organization Leader

Timeline: 4-6 months

The Governance Sprint

The Launchpad

Go from confusion to a clear Plan of Action in 4 weeks

The Problem:

You need to start somewhere—but you don’t have the budget or bandwidth for a full transformation right now.

The Promise:

A focused, 4-week engagement that gives you the blueprint. You’ll walk away with a Version 1.0 AI Policy and a clear roadmap for what to build next.

The Process:

- Week 1: Tensions Workshop (Leadership team)

- Week 2: Deployment of the Toolkit (Policy & Risk templates)

- Week 3: Review & Refinement of your draft

- Week 4: Final Roadmap Handover

Who It’s For:

- Organizations that need to get started fast

- Teams with limited budgets but real urgency

- Leaders who want to test the approach before committing to full transformation

The Outcome:

- ✓ Version 1.0 AI Policy

- ✓ Initial Risk Assessment Template

- ✓ Clear To-Do List for the future

- ✓ Foundation to build on

Timeline: 4 weeks

The AI Readiness & Culture Diagnostic

The «Iceberg» Audit

Uncover the unknown unknowns—before you write the rule

The Problem:

You sense something is off. There’s resistance you can’t name. Tools being used that leadership doesn’t know about. Tensions simmering beneath the surface. You need visibility before you can act.

The Promise:

A deep-dive diagnostic that surfaces Shadow AI, cultural resistance, and hidden risks. Total visibility for your Board—so you can make informed decisions about what to build next.

The Process:

- 10-15 confidential stakeholder interviews

- Shadow AI mapping (what’s actually being used)

- Cultural readiness assessment

- «State of the Nation» Gap Analysis Report

Who It’s For:

- Organizations feeling «stuck»

- Teams facing internal conflict about AI

- Leadership that needs the full picture before investing in governance

The Outcome:

- ✓ Complete Shadow AI Map

- ✓ Cultural Readiness Assessment

- ✓ Risk & Gap Analysis Report

- ✓ Strategic Recommendations for next steps

Testimonial:

«Ayşegül delivered a friendly and informative session that triggered deep reflection at our company and influenced our AI strategy. We would be very happy to work together in the future.»

— Corporate Strategy Team

Timeline: 3-4 weeks

Technical Validation & Risk

For builders and buyers of high-stakes AI. Rigorous evaluation before you deploy—or before you sign the contract.

Human-in-the-Loop Red Teaming

Stress-Testing for Real-World Harm

We break your model before the public does.

The Problem:

You’re about to deploy an AI system. Your technical team says it works. But you don’t know what happens when real humans—including bad actors—interact with it. You need someone to find the failures before they become headlines.

The Promise:

Rigorous adversarial testing that identifies bias, hallucinations, and safety failures. We stress-test your system sociologically and technically—then give you a clear strategy to fix the design flaws.

The Process:

- Threat Modeling: Defining your specific «Bad Actors» and «Vulnerable Users»

- Adversarial Testing: Manual and scripted testing for bias, hallucinations, jailbreaks, and safety failures

- Documentation Review: Examining training data provenance and model cards

- Risk Mitigation Report: Prioritized findings with actionable fixes

Who It’s For:

- AI Vendors launching new products

- Tech-for-Good startups

- NGOs deploying chatbots or automated decision systems

- Organizations buying enterprise AI tools

The Outcome:

- ✓ Comprehensive vulnerability report

- ✓ Prioritized risk findings

- ✓ Mitigation strategy and design recommendations

- ✓ A battle-tested system ready for launch

Testimonial:

«Aysegul led a fantastic and interesting workshop that delivered what was promised in the program description. She had in-depth knowledge of red teaming that she made accessible to a diverse audience, carefully explained why red teaming mattered and some of the risks associated with the concept, and she concluded with helping to get us hands-on experience with performing red teaming tasks. I acquired both new knowledge and skills that I plan to use in my work. I wish the session was longer and I would have paid for it!»

— Workshop Participant

Timeline: 2-4 weeks (depending on scope)

Algorithmic Impact Assessment (AIA)

Regulatory Compliance & White Box Review

Get «audit-ready» for regulators and donors.

The Problem:

You’re operating in a regulated space—or you will be soon. The EU AI Act is here. NYC Local Law 144 applies to hiring tools. Your funders are asking harder questions. You need rigorous documentation that proves your system is fair, transparent, and compliant.

The Promise:

A structured evaluation aligned with EU AI Act and NIST AI RMF standards. We examine your system’s fairness, document its limitations, and identify regulatory gaps—giving you the evidence you need for compliance.

The Process:

- Training data provenance review

- Fairness metric evaluation (demographic analysis where applicable)

- Transparency and explainability assessment

- Regulatory gap analysis

- Formal AIA Report

Who It’s For:

- Public sector organizations

- Health Tech deploying clinical AI

- HR Tech using automated hiring tools

- Any «high-risk» use case under EU AI Act

The Outcome:

- ✓ Formal Algorithmic Impact Assessment Report

- ✓ Fairness evaluation documentation

- ✓ Regulatory compliance gap analysis

- ✓ «Audit-Ready» status for regulators and donors

Testimonial:

«The session provided a solid overview of measuring impacts and assessing risks, helping me understand the growing importance of this field. The real-world examples were especially insightful, highlighting the need for regulations and control.»

— Workshop Participant

Timeline: 3-5 weeks per algorithm

AI Vendor Due Diligence

The Procurement Guard

Know exactly what you’re buying before you sign.

The Problem:

Your team wants to buy an enterprise AI tool—Copilot, Salesforce Einstein, an industry chatbot. The vendor’s sales deck looks impressive. But you don’t have the technical expertise to ask the hard questions. You need someone in your corner before you sign the contract.

The Promise:

Independent evaluation of any AI tool you’re considering. We review the technical documentation, interrogate the vendor on your behalf, and tell you exactly what the red flags are.

The Process:

- Technical documentation review

- «Hard Questions» vendor interrogation

- Data privacy and security assessment

- «Red Flag» Report with go/no-go recommendation

Who It’s For:

- NGOs and Foundations buying enterprise tools

- Organizations without in-house AI expertise

- Procurement teams making high-stakes AI purchases

The Outcome:

- ✓ Clear understanding of what you’re actually buying

- ✓ Red Flag Report with specific concerns

- ✓ Negotiation leverage with the vendor

- ✓ Confidence in your procurement decision

Timeline: 1-2 weeks per tool

Scale & Education

For networks, foundations, and leaders. Move entire ecosystems from awareness to practice—or build your own AI leadership capacity.

The Global Governance Launchpad

The Cohort Model

Equip your entire network with AI Governance in one sprint.

The Problem:

You’re a foundation, federation, or network organization. Your grantees or members are all facing the same AI challenges—but you can’t afford to run individual governance engagements with each of them. You need scalable impact.

The Promise:

A cohort-based accelerator that equips up to 20 organizations with AI governance frameworks simultaneously. Move your whole sector from awareness to compliance.

The Process:

- 3 Live Masterclasses: Tensions, Policy, and Risk

- Toolkit License: All participants receive governance templates

- Group Office Hours: Facilitated peer learning and Q&A

- Individual Check-ins: Brief 1:1 support for each organization

Who It’s For:

- TechSoup and similar intermediary networks

- Foundations with multiple grantees

- Federations and membership organizations

- Sector-wide capacity building initiatives

The Outcome:

- ✓ Up to 20 organizations with governance foundations

- ✓ Shared language and frameworks across your network

- ✓ Peer learning community

- ✓ Scalable sector-wide impact

Timeline: 6-8 weeks

The Executive AI Clinic

Confidential Leadership Coaching

A safe space for leaders to ask the «stupid» questions.

The Problem:

You’re a CEO, Board Member, or Trustee. Everyone expects you to understand AI—but you have questions you can’t ask in public. What’s my personal liability? What should I actually be worried about? How do I lead on something I don’t fully understand?

The Promise:

Private, confidential sessions designed specifically for senior leaders. No judgment. No jargon. Just clarity on what you need to know—and what you need to do.

The Process:

- Session 1: Reality Check — What AI actually is, what’s hype, what’s real

- Session 2: Liability — Your personal and organizational exposure

- Session 3: Strategy — How to lead with confidence

Who It’s For:

- CEOs and Executive Directors

- Board Members and Trustees

- Senior leaders new to AI governance

The Outcome:

- ✓ Clear understanding of AI fundamentals

- ✓ Awareness of personal liability and organizational risk

- ✓ Confidence to lead AI governance conversations

- ✓ Strategic clarity on next steps

Timeline: 3 x 90-minute sessions (flexible scheduling)

The Participatory Governance Assembly

The «Zumbara» Special

Don’t govern for your community—govern with them.

The Problem:

Your organization claims to be «community-led.» But when it comes to AI decisions, the community isn’t in the room. You want to practice what you preach—and involve your beneficiaries in defining the AI red lines.

The Promise:

A facilitated «Citizens’ Assembly» model where your community members—not just your staff—define the principles and boundaries for your AI use. Governance that embodies your values.

The Process:

- Assembly design and participant selection

- Pre-work and context-setting for participants

- Facilitated 1-2 day Assembly

- Synthesis and recommendations report

- Integration into organizational governance

Who It’s For:

- Community-led organizations

- Membership organizations

- NGOs with strong beneficiary relationships

- Organizations committed to participatory practices

The Outcome:

- ✓ Community-defined AI principles

- ✓ Legitimate governance that reflects stakeholder values

- ✓ Deeper trust with your community

- ✓ A model you can repeat for future decisions

Timeline: 4-6 weeks (including design and synthesis)

Keynotes & Strategic Workshops

Bringing the «Spring Cleaning» philosophy to conferences, leadership retreats, and team offsites. Inspiring and practical—designed to shift how your audience thinks about AI.

KEYNOTES

Ideas that shift perspective. Frameworks you can use Monday morning

Signature Talks:

«Spring Cleaning for Your AI Strategy»

Why governance isn’t a document—it’s a culture. The life-centered approach to building AI systems that serve your mission.

«Being Guardians of Principles»

How to move from abstract ethics to operational practice. The Look, Create, Build methodology for organizations ready to lead.

«A Ticket For Your Time Machine»

The choices we’re making today about AI will shape the next century. A reflection on technology, values, and what it means to build responsibly.

Testimonial:

«Ayşegül has a rare ability to bridge the gap between technical AI concepts and social impact. Her keynote was not only inspiring but deeply practical. She engaged our diverse audience and left us with clear takeaways on responsible innovation.»

— Conference Organizer

STRATEGIC WORKSHOPS

Interactive sessions that build shared understanding and momentum

Workshop Options:

The Tensions Workshop (Half-day)

Creating safe space for your team to surface anxieties, desires, and disagreements about AI. The foundation for any governance work.

The Risk Assessment Practicum (Full-day)

Hands-on training in algorithmic impact assessment. Your team learns to identify stakeholders, map potential harms, and prioritize risks.

AI Literacy for Leaders (Half-day)

Demystifying AI for non-technical leadership. What’s real, what’s hype, and what decisions you need to make.

Long-Term Partnership

For organizations that want ongoing support as they grow. Available only to past clients.

The «Guardian» Retainer

Fractional Chief AI Ethics Officer

Insurance. I’m on speed dial to keep you safe as you grow.

The Problem:

You’ve built your governance foundation—but AI doesn’t stand still. New tools emerge. Regulations change. Edge cases arise. You need someone in your corner, continuously.

The Promise:

Ongoing support from someone who knows your organization, your principles, and your risks. I become your fractional AI ethics advisor—available when you need me.

What’s Included:

- Ad-hoc email support (48-hour response)

- Monthly strategy call

- Quarterly AI Inventory audit

- Priority access for urgent questions

Who It’s For:

Past clients who’ve completed a Governance Roadmap, Sprint, or Diagnostic.

Commitment: Minimum 3 months

AI Crisis Response

The Emergency Button

Immediate intervention when things go wrong.

The Problem:

Something happened. An AI system failed publicly. A bias incident hit the news. A data breach exposed your automated decisions. You need expert support—now.

The Promise:

Rapid assessment and containment within 48 hours. We stabilize the situation, develop your response strategy, and support your stakeholder communications.

What’s Included:

- 48-hour Rapid Assessment

- Containment strategy

- Stakeholder communications support

- Post-crisis governance recommendations

Who It’s For:

Past clients who’ve completed a Governance Roadmap, Sprint, or Diagnostic, facing an AI-related crisis.

Timeline: 2-week sprint

Not Sure Where to Start?

Every organization’s journey is different. Some need the full transformation. Some need a focused sprint. Some just need visibility before they can move forward.

Let’s have a conversation about where you are—and what would actually serve you best.